Hi guys,

I’m currently experimenting with the Google NLP API in Streamlit, seeking to limit the number of API calls in order to optimise costs. (app users will have to upload their own GCP credentials, so cost optimisation is key. :))

Currently, each time I move e.g. a slider, the full codebase is re-run and API costs occur accordingly.

There are 2 functions related to these API calls:

@st.cache(allow_output_mutation=True)

def sample_analyze_entities(html_content):

client = language_v1.LanguageServiceClient()

type_ = enums.Document.Type.HTML #you can change this to be just text; doesn't have to be HTML.

language = "en"

document = {"content": html_content, "type": type_, "language": language}

encoding_type = enums.EncodingType.UTF8

response = client.analyze_entities(document, encoding_type=encoding_type)

return response

@st.cache(allow_output_mutation=True)

def return_entity_dataframe(response):

output = sample_analyze_entities(response.data)

output_list = []

for entity in output.entities:

entity_dict = {}

entity_dict['entity_name'] = entity.name

entity_dict['entity_type'] = enums.Entity.Type(entity.type).name

entity_dict['entity_salience('+response._request_url+')'] = entity.salience

entity_dict['entity_number_of_mentions('+response._request_url+')'] = len(entity.mentions)

output_list.append(entity_dict)

json_entity_analysis = json.dumps(output_list)

df = pd.read_json(json_entity_analysis)

summed_df = df.groupby(['entity_name']).sum()

summed_df.sort_values(by=['entity_salience('+response._request_url+')'], ascending=False)

return summed_df

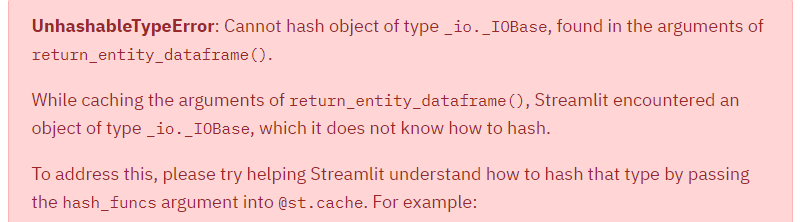

I tried @st.cache(allow_output_mutation=True) on both, yet no luck - throwing the UnhashableTypeError error:

I tried various st.cache parameters, yet every time get the above error.

Any idea on how to overcome this issue?

Very grateful for your help, as always!

Thanks,

Charly