This is awesome!

Awesome work whitphx!

I would like to ask one thing: is it possible to take photos with this component? I am building a mobile app where I need the flexibility of selecting the device, but I only need to take photos.

Thanks in advance!

Thank you!

For your question, I think the answer is yes.

You can access the frame arrays in the transform() method of your VideoTransformer.

Please check the code below. I hope it meets your needs.

Note that these snapshots are taken on the server side, not on the frontend, so if there is a network delay, there will be a delay in when the photos are taken.

    import threading
    from typing import Union

    import av
    import numpy as np
    import streamlit as st

    from streamlit_webrtc import VideoTransformerBase, webrtc_streamer


    def main():
        class VideoTransformer(VideoTransformerBase):
            # `transform()` runs in another thread, so a lock object is used here for thread safety.
            frame_lock: threading.Lock
            in_image: Union[np.ndarray, None]
            out_image: Union[np.ndarray, None]

            def __init__(self) -> None:
                self.frame_lock = threading.Lock()
                self.in_image = None
                self.out_image = None

            def transform(self, frame: av.VideoFrame) -> np.ndarray:
                in_image = frame.to_ndarray(format="bgr24")

                out_image = in_image[:, ::-1, :]  # Simple flipping for example.

                with self.frame_lock:
                    self.in_image = in_image
                    self.out_image = out_image

                return out_image

        ctx = webrtc_streamer(key="snapshot", video_transformer_factory=VideoTransformer)

        if ctx.video_transformer:
            if st.button("Snapshot"):
                with ctx.video_transformer.frame_lock:
                    in_image = ctx.video_transformer.in_image
                    out_image = ctx.video_transformer.out_image

                if in_image is not None and out_image is not None:
                    st.write("Input image:")
                    st.image(in_image, channels="BGR")
                    st.write("Output image:")
                    st.image(out_image, channels="BGR")
                else:
                    st.warning("No frames available yet.")


    if __name__ == "__main__":
        main()
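The locking pattern in the code above can be tried without Streamlit or a webcam. This is only a minimal sketch of the idea: `LatestFrameHolder` and `produce` are hypothetical names, and plain `bytes` objects stand in for the frame arrays.

```python
import threading
from typing import Optional


class LatestFrameHolder:
    """Keeps only the most recent frame, guarded by a lock."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frame: Optional[bytes] = None

    def put(self, frame: bytes) -> None:
        # Called from the worker thread, like `transform()` in the example above.
        with self._lock:
            self._frame = frame

    def snapshot(self) -> Optional[bytes]:
        # Called from the main thread, like the "Snapshot" button handler.
        with self._lock:
            return self._frame


def produce(holder: LatestFrameHolder, count: int) -> None:
    """Stand-in for the thread that receives one frame after another."""
    for i in range(count):
        holder.put(f"frame-{i}".encode())


holder = LatestFrameHolder()
worker = threading.Thread(target=produce, args=(holder, 100))
worker.start()
worker.join()

print(holder.snapshot())  # the last frame written by the worker
```

Holding the lock only while the reference is swapped or read keeps the critical section short, so the frame-producing thread is never blocked for long.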

Thanks so much for the prompt response whitphx.

Really appreciate the code and the note. Again, awesome work on your end and a great contribution to the Streamlit community.

Hello Community. Thank you so much @whitphx for this wonderful contribution.

I’m making a face-recognition app and deploying it on Heroku, but OpenCV isn’t working there. My app runs fine locally. The app should be able to detect faces in the video stream when an existing user in the database starts the webcam.

I’M TRYING TO FIGURE OUT HOW TO DO THIS USING STREAMLIT-WEBRTC

The erroneous lines in the code below are marked with the comment “#this line”. Basically, I need to draw rectangles on a face if a known user is found in a frame, and I need to access individual frames of the webcam video stream (inside the while loop) to identify known faces.

Can @whitphx @soft-nougat or someone else please help me out!

Thanks a ton in advance!

This is the error that I’m getting on Heroku, although the same code works fine locally.

This is the error in the Heroku log:

2021-05-06T09:31:16.531348+00:00 app[web.1]: [INFO] loading encodings + face detector…

2021-05-06T09:31:16.625860+00:00 app[web.1]: [INFO] starting video stream…

2021-05-06T09:31:16.626472+00:00 app[web.1]: [ WARN:1] global /tmp/pip-req-build-ddpkm6fn/opencv/modules/videoio/src/cap_v4l.cpp (893) open VIDEOIO(V4L2:/dev/video0): can’t open camera by index

Link to the github repo of the app:

    import streamlit as st
    import numpy as np
    import pickle
    import time
    import cv2
    import imutils
    from imutils.video import VideoStream
    from imutils.video import FPS
    import face_recognition

    st.write("""
    # Face Recognition App
    """)

    #Initialize 'currentname' to trigger only when a new person is identified.
    currentname = "unknown"
    #Determine faces from encodings.pickle file model created from train_model.py
    encodingsP = "encodings.pickle"
    #use this xml file
    #https://github.com/opencv/opencv/blob/master/data/haarcascades/haarcascade_frontalface_default.xml
    cascade = "haarcascade_frontalface_default.xml"

    # load the known faces and embeddings along with OpenCV's Haar
    # cascade for face detection
    print("[INFO] loading encodings + face detector...")
    st.write("[INFO] loading encodings + face detector...")
    data = pickle.loads(open(encodingsP, "rb").read())
    detector = cv2.CascadeClassifier(cascade)

    # initialize the video stream and allow the camera sensor to warm up
    print("[INFO] starting video stream...")
    st.write("[INFO] starting video stream...")
    vs = VideoStream(src=0).start() #this line
    #vs = VideoStream(usePiCamera=True).start()
    time.sleep(1.0)

    # start the FPS counter
    fps = FPS().start()

    # loop over frames from the video file stream
    names = []
    while True:
        # grab the frame from the threaded video stream and resize it
        # to 500px (to speedup processing)
        frame = vs.read() #this line
        frame = imutils.resize(frame, width=500)

        # convert the input frame from (1) BGR to grayscale (for face
        # detection) and (2) from BGR to RGB (for face recognition)
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

        # detect faces in the grayscale frame
        rects = detector.detectMultiScale(gray, scaleFactor=1.1,
            minNeighbors=5, minSize=(30, 30),
            flags=cv2.CASCADE_SCALE_IMAGE)

        # OpenCV returns bounding box coordinates in (x, y, w, h) order
        # but we need them in (top, right, bottom, left) order, so we
        # need to do a bit of reordering
        boxes = [(y, x + w, y + h, x) for (x, y, w, h) in rects]

        # compute the facial embeddings for each face bounding box
        encodings = face_recognition.face_encodings(rgb, boxes)
        #names = []

        # loop over the facial embeddings
        for encoding in encodings:
            # attempt to match each face in the input image to our known
            # encodings
            matches = face_recognition.compare_faces(data["encodings"],
                encoding)
            name = "Unknown" #if face is not recognized, then print Unknown

            # check to see if we have found a match
            if True in matches:
                # find the indexes of all matched faces then initialize a
                # dictionary to count the total number of times each face
                # was matched
                matchedIdxs = [i for (i, b) in enumerate(matches) if b]
                counts = {}

                # loop over the matched indexes and maintain a count for
                # each recognized face
                for i in matchedIdxs:
                    name = data["names"][i]
                    counts[name] = counts.get(name, 0) + 1

                # determine the recognized face with the largest number
                # of votes (note: in the event of an unlikely tie Python
                # will select first entry in the dictionary)
                name = max(counts, key=counts.get)

                #If someone in your dataset is identified, print their name on the screen
                #if currentname != name:
                #currentname = name
                #print(name)

            # update the list of names
            names.append(name)

        # loop over the recognized faces
        for ((top, right, bottom, left), name) in zip(boxes, names):
            # draw the predicted face name on the image - color is in BGR
            cv2.rectangle(frame, (left, top), (right, bottom),
                (0, 255, 0), 2)
            y = top - 15 if top - 15 > 15 else top + 15
            cv2.putText(frame, max(set(names), key = names.count), (left, y), cv2.FONT_HERSHEY_SIMPLEX,
                .8, (255, 0, 0), 2)

        # display the image to our screen
        cv2.imshow("Facial Recognition is Running", frame)
        key = cv2.waitKey(1) & 0xFF

        # quit when 'q' key is pressed
        if key == ord("q") or len(names)==30:
            break

        # update the FPS counter
        fps.update()

    #print name of person identified and accuracy
    #max([(name,names.count(name)) for name in set(names)],key=lambda x:x[1])
    print("person identified : ", max(set(names), key = names.count))
    print([(name,names.count(name)) for name in set(names)])
    st.write(names)
    st.write("person identified : ", max(set(names), key = names.count))
    st.write([(name,names.count(name)) for name in set(names)][0])

    # stop the timer and display FPS information
    fps.stop()
    print("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
    print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))
    st.write("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
    st.write("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

    # do a bit of cleanup
    cv2.destroyAllWindows()
    vs.stop()

Hi @inkarar, welcome to the Streamlit community!

Could you also post any error messages that you see in your logs on Heroku? It might help folks on here understand the technical reasons why “opencv isn’t working”.

Happy Streamlit-ing!

Snehan

I think you are subject to a common misunderstanding about how streamlit works.

With streamlit, all interaction must be done through the browser or browser APIs. Therefore, all interaction needs some streamlit component. Anything you try to do past that will fail at the latest when hosting streamlit on a server. Your application just happens to work on the local machine because the “server” and “client” are identical.

As far as I know, you can’t use the VideoStream class from imutils together with Streamlit this way. The problem is that it tries to access the video camera through the hardware of the machine the script runs on. This works locally, but not on the server, because there is no connected video camera and not even a driver for it.
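One way to see this on a given host is to check whether any camera device nodes exist at all. The sketch below assumes a Linux host, where V4L2 cameras show up as `/dev/video*` (the path that appears in the Heroku log above); `list_video_devices` is a hypothetical helper name, not part of OpenCV or imutils.

```python
import glob
from typing import List


def list_video_devices(pattern: str = "/dev/video*") -> List[str]:
    """Return the camera device nodes visible to this process (Linux only)."""
    return sorted(glob.glob(pattern))


if __name__ == "__main__":
    devices = list_video_devices()
    if devices:
        print("Camera devices on this host:", devices)
    else:
        print("No camera devices on this host; VideoStream(src=0) cannot work here.")
```

Run on a laptop with a webcam, this would normally list at least one device; on a typical cloud dyno or container the list is expected to be empty, which matches the "can't open camera by index" warning.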

@Franky1

Thank you for your response. I understand what I am doing wrong here.

But as I understand it, streamlit-webrtc can access my webcam from the Heroku server.

So I just need to be able to use streamlit-webrtc to capture frames from the live stream and then find a match against a database.

I think this can be done via streamlit-webrtc, and I’m asking for any and all help with it because I’m new to Streamlit and streamlit-webrtc. Thank you!!!

Follow the tutorial or read the code of the sample app, which I posted above in this thread. I think there is sufficient information to use this component.

In addition, there are many repos using streamlit-webrtc: Network Dependents · whitphx/streamlit-webrtc · GitHub. You can also refer to the source code of those projects.
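When porting the face-recognition script above to a streamlit-webrtc callback, the parts that never touch the camera can be kept as plain functions and reused per frame. The following is a rough sketch of those two steps (box reordering and name voting) using only the standard library; `to_css_boxes` and `vote_name` are hypothetical names introduced here, not functions from face_recognition or OpenCV.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]


def to_css_boxes(rects: Iterable[Sequence[int]]) -> List[Box]:
    """Convert OpenCV (x, y, w, h) rectangles to (top, right, bottom, left)."""
    return [(y, x + w, y + h, x) for (x, y, w, h) in rects]


def vote_name(
    matches: Sequence[bool],
    known_names: Sequence[str],
    default: str = "Unknown",
) -> str:
    """Return the most frequent name among the matched encodings, or `default`."""
    counts = Counter(name for matched, name in zip(matches, known_names) if matched)
    if not counts:
        return default
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    print(to_css_boxes([(10, 20, 30, 40)]))
    print(vote_name([True, False, True, True], ["ann", "bob", "ann", "cy"]))
```

Inside a frame-processing method, `to_css_boxes` would take the output of `detectMultiScale`, and `vote_name` would take the result of `compare_faces` together with `data["names"]`.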

@Franky1 , thank you for your answer.

His answer is totally correct, and streamlit-webrtc was developed to solve exactly that problem. For details, see the first post in this thread or the introduction of this blog post.

Hi community,

I released a new version of streamlit-webrtc, v0.20.0, with (experimental) audio support.

I added some samples to the example app which deal with audio streams: https://share.streamlit.io/whitphx/streamlit-webrtc-example/main/app.py

However, I don’t have experience with audio/signal processing, and some samples are just copied from the web… I would like someone who is familiar with this field to help create better examples or design a more useful API.

I also created a real-time Speech-to-Text app: https://share.streamlit.io/whitphx/streamlit-stt-app/main/app_deepspeech.py , which I think is a very impressive example of the audio capability of this new version of streamlit-webrtc (the STT functionality is based on DeepSpeech).

(The generated text is not very precise, probably because of my non-native English and my sound environment, such as the microphone.)

The source code is here.

For developers who have been using streamlit-webrtc, please note that some breaking changes have been introduced in this version. VideoTransformerBase class and its transform() method are now deprecated and will be removed. Please use VideoProcessorBase and its recv() instead. For further info, check app.py.

Ok, now I reaaally need to delve into these audio streams!

There’s a topic on this forum about Guitar Chord recognition I’ve been dying to solve forever: “Selecting audio input or output device”, and I might finally be able to provide an answer.

Thanks for your hard work @whitphx

Fanilo

Hello developer,

I love your real-time webcam video streaming functionality built with streamlit-webrtc. I saw the experiment you did with the Neural Style Transfer app by Harsh Malra, and I’m really astonished by it.

I tried this feature with my pose detection ML model, but it didn’t work out. With real-time webcam video streaming, the app would be at its best. I would be grateful if you could help me with this.

This is the source code for my app named self'it.

My Work:

Hi, I have built an app which predicts yoga poses, but there is a problem with the webcam. While running on a local server with OpenCV I am able to track my pose, but after implementing streamlit-webrtc an issue appeared: it cannot open the camera feed.

Detail Error:

Connection(0) Check CandidatePair((‘192.168.0.102’, 51048) → (‘103.132.3.54’, 61749)) State.IN_PROGRESS → State.FAILED

2021-06-09 12:33:44.326 Connection(0) ICE failed

2021-06-09 12:33:44.360 ICE connection state is failed

2021-06-09 12:33:44.362 ICE connection state is closed

I am enjoying the process of building this app, and I appreciate the work of the Streamlit team and also @whitphx for creating streamlit-webrtc.

Waiting for an answer.

Thank you

@iamchoudhari11

Hi,

It seems this problem can occur in some environments, and there have been some reports like Issue about Video Recording · Issue #151 · whitphx/streamlit-webrtc · GitHub.

I think this is because the WebRTC packets are dropped between the server and clients.

- Can you use the app locally? If so, the problem resides on the network between your local env and the remote host.

- Are you using firewall software which drops some packets, or are you inside a network with security like office or campus networks where some communications are blocked?

- Check if the app works when your client machine is on another network, especially one where nothing is blocked.

@Shalini-lodhi

Hi

I could not find any code using streamlit-webrtc in that repository.

What problem are you seeing, and where is the code that uses this component?

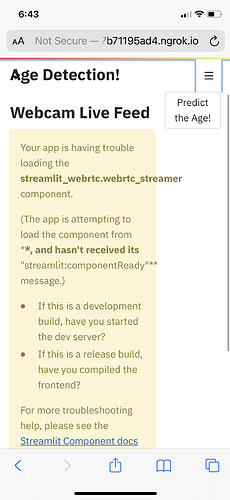

@whitphx Any time I try accessing the camera for my app, this is the error I get. Any help, please?

OK,

Please create a new GitHub issue on the repository and share the complete codebase which I can run to reproduce the bug.

Can you please point me to the exact part where I can use only the microphone as an input, without using the camera? Thank you

You can find the sound-only demo and its related code in app.py in the repo.

The Speech-to-Text app posted above can also be an example as it has sound-only mode.
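For reference, capturing only the microphone comes down to the media constraints that the browser is asked for. A minimal sketch, assuming a streamlit-webrtc release whose `webrtc_streamer` accepts a `media_stream_constraints` argument (check app.py in the repo for the form your version expects); the constant name is made up here.

```python
from typing import Dict, Union

# getUserMedia-style constraints: request the microphone and skip the camera.
AUDIO_ONLY_CONSTRAINTS: Dict[str, Union[bool, dict]] = {
    "video": False,
    "audio": True,
}
```

Under that assumption it would be passed as `webrtc_streamer(key="audio-only", media_stream_constraints=AUDIO_ONLY_CONSTRAINTS)`, typically together with a send-only mode as in the sound-only demo.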

@iamchoudhari11 I have a similar type of error when I deploy my app in streamlit sharing. Did you find any solution for it?

@whitphx Can you please help in solving this error? My source code is at repo