Hi, let me clarify up front that this issue only occurs when I deploy the Streamlit app on AWS Fargate. It works fine locally and on an AWS EC2 instance. However, I have been unable to find a way to debug it, and I am wondering if the community can help.

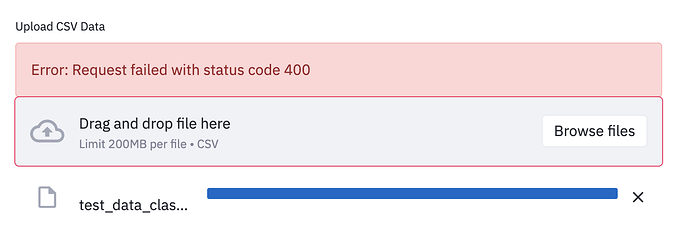

The error comes from a 400 Bad Request when re-uploading a CSV file. The file is only 4 KB in size. The error is intermittent, and re-uploading seems to work only if I manually click the “x” button and then refresh the browser.

The logs revealed that there were issues removing the previous file from the session, with the error “RecursionError: maximum recursion depth exceeded”.

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/streamlit/uploaded_file_manager.py", line 178, in remove_files

2021-02-01T17:23:11.829+08:00 self._remove_files(session_id, widget_id)

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/streamlit/uploaded_file_manager.py", line 163, in _remove_files

2021-02-01T17:23:11.829+08:00 self.update_file_count(session_id, widget_id, 0)

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/streamlit/uploaded_file_manager.py", line 229, in update_file_count

2021-02-01T17:23:11.829+08:00 self._on_files_updated(session_id, widget_id)

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/streamlit/uploaded_file_manager.py", line 81, in _on_files_updated

2021-02-01T17:23:11.829+08:00 self.on_files_updated.send(session_id)

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/blinker/base.py", line 267, in send

2021-02-01T17:23:11.829+08:00 for receiver in self.receivers_for(sender)]

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/blinker/base.py", line 267, in <listcomp>

2021-02-01T17:23:11.829+08:00 for receiver in self.receivers_for(sender)]

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/streamlit/server/server.py", line 266, in on_files_updated

2021-02-01T17:23:11.829+08:00 self._uploaded_file_mgr.remove_session_files(session_id)

2021-02-01T17:23:11.829+08:00 File "/usr/local/lib/python3.7/site-packages/streamlit/uploaded_file_manager.py", line 196, in remove_session_files

2021-02-01T17:23:11.829+08:00 self.remove_files(*files_id)

2021-02-01T17:23:11.829+08:00 RecursionError: maximum recursion depth exceeded

The code snippet is below. I tried setting a higher recursion limit, but it had no effect.

import sys

import pandas as pd
import streamlit as st

sys.setrecursionlimit(10000)

def main(url):
    st.title('MVP Demo Site')
    uploaded_file = st.file_uploader("Upload CSV Data", type=['csv'])
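My guess at why raising the recursion limit does not help: the traceback looks like a cycle (`remove_files` leads to `on_files_updated`, which leads back to `remove_files`) with no exit, so any finite limit would eventually be hit. Below is a minimal sketch of that call shape only. The two functions are hypothetical stand-ins named after frames in the traceback, not Streamlit's actual code, and `cycle_overflows` is a helper I made up for illustration.

```python
import sys


def remove_files(depth):
    # Stand-in for remove_files -> _remove_files -> update_file_count,
    # which ends by firing the "files updated" signal.
    return on_files_updated(depth + 1)


def on_files_updated(depth):
    # Stand-in for the signal handler, which calls remove_session_files
    # and therefore remove_files again. There is no base case.
    return remove_files(depth + 1)


def cycle_overflows(limit):
    """Return True if the cycle still overflows under the given limit."""
    old_limit = sys.getrecursionlimit()
    sys.setrecursionlimit(limit)
    try:
        remove_files(0)
        return False
    except RecursionError:
        return True
    finally:
        # Restore the interpreter's previous limit.
        sys.setrecursionlimit(old_limit)
```

If this is what is happening, `cycle_overflows(1000)` and `cycle_overflows(5000)` behave the same way, which would match what I see with `sys.setrecursionlimit(10000)`: the higher limit only delays the error.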

Can anyone help?